Next.js SEO Optimization for Developers and SEO Experts: Part 1 - Crawling, Indexing, and Rendering Strategies

Search Engine Optimization (SEO) is all about boosting your website's visibility in search results, ultimately leading to more organic traffic and potential customers. It's like building a ladder to the top of Google's search engine kingdom!

But SEO isn't just about throwing keywords around—it's a multi-faceted strategy with three main pillars:

Technical SEO: Ensuring your website is easily crawled and indexed by search engines like Google. Think of this as building a sturdy, crawlable bridge to your website.

Content Strategy: Creating relevant, high-quality content that targets specific keywords and engages your audience. This is the heart and soul of your website, the treasure at the top of the ladder.

Off-page SEO: Building trust and authority through backlinks from other websites. Imagine these as sturdy ropes connecting your website to other parts of the online kingdom.

Next.js, a popular React framework, offers plenty of tools and flexibility to excel in all three SEO areas. But mastering its SEO requires delving deeper than surface-level techniques. This two-part series dives into advanced strategies for optimizing your Next.js website for search engines, focusing on the technical aspects that software developers and SEO experts can leverage to climb the SERP ladder.

Crawling and Indexing: Building the SEO Path for Crawlers

Understanding how search engines like Google crawl and index your website is crucial for optimization. Googlebot, the ubiquitous crawler, navigates through links, analyzes content, and determines which pages deserve a place in the search results. Let's optimize your site to be a welcoming haven for Googlebot:

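HTTP Status Codes: Use meaningful codes: 200 for successful pages, 301/308 for permanent redirects, and 404 (or 410) for content that is truly gone. A 404 for a page that genuinely no longer exists is normal and does not hurt the rest of your site. What does cause trouble is internal links pointing at missing pages, and "soft 404s" (error pages served with a 200 status), which send crawlers mixed signals.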
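In Next.js, permanent redirects can be declared with the `redirects` option in `next.config`. With `permanent: true` Next.js responds with a 308, and with `permanent: false` it responds with a 307. The sketch below is a minimal example under stated assumptions: the paths (`/article/123`, `/old-blog/:slug`) are hypothetical, and the local `Redirect` type stands in for the config types exported by `next`. For missing content in the App Router, calling `notFound()` from `next/navigation` inside a page renders the not-found UI, typically with a 404 status.

```typescript
// next.config.ts: a minimal sketch; the paths below are hypothetical examples.
// Local stand-in for the redirect shape Next.js expects (source, destination, permanent).
type Redirect = { source: string; destination: string; permanent: boolean };

// Old URLs that should send crawlers (and their link signals) to the new pages.
const legacyRedirects: Redirect[] = [
  // A single page that moved.
  { source: "/article/123", destination: "/blog/seo-best-practices", permanent: true },
  // A whole section that moved; ":slug" captures the segment and reuses it.
  { source: "/old-blog/:slug", destination: "/blog/:slug", permanent: true },
];

const nextConfig = {
  // Next.js reads this at build time; permanent: true => 308, false => 307.
  async redirects() {
    return legacyRedirects;
  },
};

export default nextConfig;
```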

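Robots.txt: This file tells crawlers which paths they may and may not crawl. Keep it clear and concise so crawlers spend their time on the pages that matter. Note that robots.txt controls crawling, not indexing: a disallowed URL can still show up in search results if other pages link to it, so use a noindex tag (on a page that stays crawlable, so the tag can be seen) for pages that must stay out of the index. For example: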

```text
# robots.txt
# All crawlers may access the whole site except /about
User-agent: *
Disallow: /about
Allow: /
```
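With the App Router, you don't have to maintain this file by hand: an `app/robots.ts` file that default-exports a function returning the rules makes Next.js serve the result as `/robots.txt`. The sketch below assumes a placeholder origin (`https://example.com`). In a real project you can annotate the return type as `MetadataRoute.Robots` from `next`; it is left off here so the sketch runs without dependencies.

```typescript
// app/robots.ts: Next.js serves the returned object as /robots.txt.
// "https://example.com" is a placeholder for your production origin.
const SITE_URL = "https://example.com";

export default function robots() {
  return {
    rules: {
      userAgent: "*",     // applies to every crawler
      allow: "/",         // everything is crawlable...
      disallow: "/about", // ...except /about
    },
    // Pointing crawlers at the sitemap helps them discover URLs sooner.
    sitemap: `${SITE_URL}/sitemap.xml`,
  };
}
```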

XML Sitemaps: Think of these as detailed blueprints for your website. A sitemap lists the URLs you want indexed, along with details such as when each page was last modified, helping crawlers discover and index important content faster. Consider generating your sitemap dynamically so it updates automatically as your website evolves.
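A dynamic sitemap in the App Router can be an `app/sitemap.ts` file whose default export returns an array of entries; Next.js serves it as `/sitemap.xml`. In this sketch, `getAllPosts()` is a hypothetical stand-in for a CMS or database query, and the origin and dates are placeholders. The return type could be annotated as `MetadataRoute.Sitemap` from `next`.

```typescript
// app/sitemap.ts: Next.js serves the returned array as /sitemap.xml.
const SITE_URL = "https://example.com"; // placeholder origin

type Post = { slug: string; updatedAt: Date };

// Hypothetical data source; in practice this would query your CMS or database.
function getAllPosts(): Post[] {
  return [
    { slug: "seo-best-practices", updatedAt: new Date("2024-01-15") },
    { slug: "nextjs-rendering-strategies", updatedAt: new Date("2024-02-01") },
  ];
}

export default function sitemap() {
  // Routes that always exist.
  const staticRoutes = [{ url: SITE_URL, lastModified: new Date() }];

  // One entry per post, so new posts show up without editing this file.
  const postRoutes = getAllPosts().map((post) => ({
    url: `${SITE_URL}/blog/${post.slug}`,
    lastModified: post.updatedAt, // an accurate lastmod helps crawlers prioritize
  }));

  return [...staticRoutes, ...postRoutes];
}
```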

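Meta & Canonical Tags: Use meta robots and canonical tags strategically. The robots meta tag controls indexing (noindex keeps a page out of search results) and link following (nofollow tells crawlers not to follow the links on that page). A canonical tag (<link rel="canonical" href="...">) indicates which URL you prefer when the same content is reachable at several URLs, so search engines don't split signals across duplicates.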

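```html
<!-- Keep this page out of the index and don't follow its links -->
<meta name="robots" content="noindex,nofollow" />

<!-- or: allow indexing and link following (the default behavior) -->
<meta name="robots" content="all" />
```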
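In the App Router, these tags usually come from the Metadata API rather than hand-written HTML: a page can export a `metadata` object whose `robots` and `alternates.canonical` fields Next.js turns into the robots meta tag and the canonical link. The sketch below assumes a placeholder canonical URL; in a real project you would type the object as `Metadata` from `next`, and you could use a relative canonical path if `metadataBase` is set in your root layout.

```typescript
// app/blog/[slug]/page.tsx (metadata export only; the page component is omitted).
// The canonical URL is a placeholder.
export const metadata = {
  robots: {
    index: true,  // set to false to emit "noindex"
    follow: true, // set to false to emit "nofollow"
  },
  alternates: {
    // Rendered as <link rel="canonical" href="...">
    canonical: "https://example.com/blog/seo-best-practices",
  },
};
```

Avoid combining `index: false` with a canonical URL on the same page, since the two send conflicting signals.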

Rendering Matters: Choosing the Right Path for Performance and SEO

Next.js offers various rendering options with different SEO implications:

Static Site Generation (SSG): The king of SEO! HTML is pre-rendered at build time, making it lightning fast and easily indexable. Think of it as serving pre-baked cookies to hungry crawlers.

Server-Side Rendering (SSR): Pages are generated on the server for each request. This is ideal for content that changes constantly or depends on the request itself, such as personalized data. Crawlers still receive fully rendered HTML, but the per-request work can increase response times (Time to First Byte) compared with static pages. Imagine baking fresh cookies on demand: still delicious, but it takes a bit longer.

Incremental Static Regeneration (ISR): A hybrid approach that serves static pages and regenerates them in the background after a set revalidation interval, or on demand when your data changes. It offers a balance between SEO benefits and dynamic content flexibility. Consider it as updating the cookie recipe occasionally to keep things fresh.

Client-Side Rendering (CSR): Content is rendered in the browser using JavaScript. While appealing for interactivity, it can hinder SEO: Google has to render the JavaScript before it sees the content, which can delay indexing, and some other crawlers may not execute JavaScript at all. Think of serving raw dough to crawlers: they might have trouble understanding it without the baking process.

For optimal SEO, prioritize SSG or ISR for most pages, use SSR when content must be fresh on every request, and reserve CSR for highly interactive sections. Remember, rendering choices affect both user experience and search engine visibility.
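In the App Router, these choices are mostly expressed through route segment exports. The sketch below shows SSG via `generateStaticParams` combined with ISR via `revalidate`; the slugs are hypothetical stand-ins for a CMS query, and the page component itself is omitted. (In the Pages Router, the equivalents are `getStaticProps`, optionally returning `revalidate` for ISR, and `getServerSideProps` for SSR.)

```typescript
// app/blog/[slug]/page.tsx (route config only; the page component is omitted).
// Hypothetical slugs standing in for a CMS or database query.
const knownSlugs = ["seo-best-practices", "nextjs-rendering-strategies"];

// ISR: serve the static HTML, and regenerate it in the background at most
// once every 3600 seconds (1 hour), triggered by an incoming request.
export const revalidate = 3600;

// SSG: pre-render one page per known slug at build time.
export async function generateStaticParams() {
  return knownSlugs.map((slug) => ({ slug }));
}

// For a page that must be rendered on every request (SSR), you would instead export:
// export const dynamic = "force-dynamic";
```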

URL Structure: The Roadmap to Clarity

Your website's URL structure acts as a roadmap for users and search engines. Craft URLs that are:

Semantic: Use descriptive words instead of numbers or IDs, like /blog/seo-best-practices instead of /article/123.

Consistent: Follow logical patterns across pages, making navigation intuitive.

Keyword-focused: Include relevant keywords, but avoid keyword stuffing and long, parameter-heavy URLs such as /products?id=123&sort=asc.

A clear and informative URL structure enhances both user experience and SEO, guiding both humans and crawlers to the right destination.
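Semantic URLs are often generated from page titles. The helper below is a hypothetical utility (not a Next.js API) that turns a title into a lowercase, hyphenated slug; a dynamic route such as `app/blog/[slug]/page.tsx` would then serve it under `/blog/`. It assumes ASCII-only slugs are wanted, so accented letters are reduced to their base letters and other characters are dropped.

```typescript
// lib/slugify.ts: hypothetical helper for building semantic URL segments.
export function slugify(title: string): string {
  return title
    .normalize("NFKD")               // split accented letters into base letter + diacritic
    .replace(/[\u0300-\u036f]/g, "") // drop the diacritics
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "-")     // each run of other characters becomes one hyphen
    .replace(/^-+|-+$/g, "");        // trim leading and trailing hyphens
}
```

For example, `slugify("SEO Best Practices!")` yields `seo-best-practices`, giving the URL `/blog/seo-best-practices`.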

Metadata: Describing Your SEO Treasure Trove

Think of metadata as a concise summary of your page's content, attracting potential visitors and guiding search engines. Key elements include:

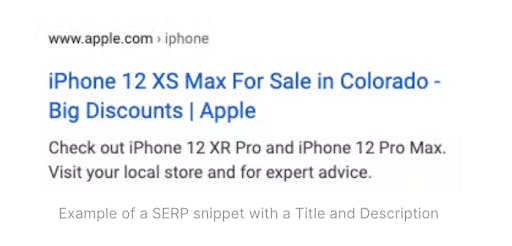

Title Tag: One of the most important on-page SEO elements. It summarizes your page content, usually appears as the clickable headline in search results, and influences click-through rates. Make it enticing and relevant, incorporating targeted keywords. For example:

```html
<title>iPhone 12 Pro Max For Sale in Colorado - Big Discounts | Apple</title>
```

Meta Description: While it doesn't directly affect rankings, it is often shown as the snippet below your title in search results, so it can influence clicks. Write a compelling description that expands on your title tag and entices users to click. For example:

```html
<meta name="description" content="Check out iPhone 12 Pro and iPhone 12 Pro Max. Visit your local store for expert advice." />
```
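For pages whose title and description depend on data, the App Router lets a page export an async `generateMetadata` function that returns `title` and `description`, which Next.js renders as the `<title>` and meta description tags. In the sketch below, `getProduct` and its catalog are hypothetical stand-ins for a CMS or database lookup. It follows the Next.js 14 signature; in Next.js 15, `params` is a Promise, so you would `await params` first.

```typescript
// app/products/[slug]/page.tsx (metadata only; the page component is omitted).
type Product = { name: string; region: string; summary: string };

// Hypothetical lookup; replace with your CMS or database query.
async function getProduct(slug: string): Promise<Product> {
  const catalog: Record<string, Product> = {
    "iphone-12-pro-max": {
      name: "iPhone 12 Pro Max",
      region: "Colorado",
      summary: "Check out iPhone 12 Pro and iPhone 12 Pro Max. Visit your local store for expert advice.",
    },
  };
  const product = catalog[slug];
  // A real page would call notFound() from next/navigation here instead.
  if (!product) throw new Error(`Unknown product: ${slug}`);
  return product;
}

// Next.js calls this to build the page's <title> and meta description.
export async function generateMetadata({ params }: { params: { slug: string } }) {
  const product = await getProduct(params.slug);
  return {
    title: `${product.name} For Sale in ${product.region} - Big Discounts | Apple`,
    description: product.summary,
  };
}
```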

Open Graph Protocol: Make your content social media-friendly with images and snippets. Imagine this as the beautifully decorated box your SEO treasure comes in, catching attention on social platforms. To add Open Graph tags with the Pages Router, set the property attribute (for example, property="og:title") on meta tags within the Head component from next/head; with the App Router, use the openGraph field of the Metadata API instead.

Structured Data: Structured data, usually Schema.org markup embedded as JSON-LD, helps search engines understand your content on a deeper level and can make your pages eligible for rich results. Imagine providing a detailed ingredient list and instructions with your cookies, allowing even picky search engines to appreciate their deliciousness.
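Here is a sketch of both ideas in an App Router page: Open Graph data through the `openGraph` field of `metadata`, and a Schema.org `Product` described as JSON-LD. All URLs, the image, and the price are placeholders. The serializer escapes `<` so that a value containing `</script>` cannot close the script tag early, which mirrors the sanitization the Next.js docs suggest for JSON-LD. The JSON-LD helpers are deliberately not exported, because page files only allow specific exports.

```typescript
// app/products/[slug]/page.tsx (excerpt; the page component is omitted).
const SITE_URL = "https://example.com"; // placeholder origin

// Open Graph: Next.js renders these fields as <meta property="og:..."> tags.
export const metadata = {
  openGraph: {
    title: "iPhone 12 Pro Max For Sale in Colorado",
    description: "Big discounts on iPhone 12 Pro Max. Visit your local store for expert advice.",
    url: `${SITE_URL}/products/iphone-12-pro-max`,
    type: "website",
    // 1200x630 is a common size for social preview images.
    images: [{ url: `${SITE_URL}/og/iphone-12-pro-max.png`, width: 1200, height: 630 }],
  },
};

// Structured data: a Schema.org Product (placeholder values throughout).
const productJsonLd = {
  "@context": "https://schema.org",
  "@type": "Product",
  name: "iPhone 12 Pro Max",
  image: `${SITE_URL}/images/iphone-12-pro-max.png`,
  offers: {
    "@type": "Offer",
    price: "999.00", // placeholder price
    priceCurrency: "USD",
    availability: "https://schema.org/InStock",
  },
};

// Serialize for <script type="application/ld+json">, escaping "<" as \u003c.
function toJsonLdScriptContent(data: unknown): string {
  return JSON.stringify(data).replace(/</g, "\\u003c");
}
```

In the page component you could then render `<script type="application/ld+json" dangerouslySetInnerHTML={{ __html: toJsonLdScriptContent(productJsonLd) }} />`.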

On-Page SEO: Optimizing the Content Within

On-page SEO focuses on optimizing the actual content displayed on your pages. Think of it as polishing the inside of your SEO cookie:

Headings: Use descriptive headings like <h1> and <h2> to structure your content, making it easy for users and search engines to navigate.

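Internal Linking: Connect your pages with relevant links, improving discoverability and passing authority (PageRank) between pages. Crawlers reliably follow only <a> elements with an href attribute, so avoid navigation that depends solely on JavaScript click handlers. Next.js's Link component renders a standard <a href> element while still handling smooth client-side transitions between routes, so you get crawlable links and fast navigation at the same time.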

So far, we've covered crawling and indexing, rendering strategies, URL structure, metadata, and on-page SEO. In Part 2, we'll dive into Performance and Web Vitals, and throw in some Advanced Strategies. Stay tuned and keep exploring!